Documentation of ASIR’s 2nd year final project by Juan Jesús Alejo Sillero.

⚠️ DISCLAIMER: Please note that this article is a manual translation of the Spanish version. The screenshots may have been originally taken in Spanish, and some words/names may not match completely. If you find any errors, please report them to the author. ⚠️

Description

The goal of the project is to build a static website that a GitHub Actions CI/CD pipeline deploys to AWS automatically from the Markdown files we push to the repository. Specifically, the static files will be stored in an S3 bucket.

This type of configuration is known as serverless since we completely dispense with having an instance/machine serving the content 24/7.

The infrastructure will be managed using Terraform.

Technologies to be used

Amazon Web Services (AWS)

- Amazon Web Services is a cloud service provider, providing storage, computing, databases and much more in terms of cloud computing.

The following AWS services will be used throughout this project:

- IAM: Identity and Access Management lets us manage access to AWS resources securely. We will use it to create a user with permissions to manage the resources of this project, which avoids working with the account's root user, as best practices recommend.

- ACM: AWS Certificate Manager allows us to manage SSL/TLS certificates for our domains.

- S3: Amazon Simple Storage Service is an object storage service that provides scalability, data availability, security and performance. I will use it to store the static website files.

- CloudFront: Amazon CloudFront is a content delivery network (CDN) service that allows us to deliver content to users around the world with low latency and high transfer speeds. It will be useful to improve web performance and reduce loading time. Although its impact will not be as noticeable in this project due to the small amount of content to be served, it is worth investigating its operation for future, more complex projects.

- AWS CLI: The AWS Command Line Interface is a tool that allows us to interact with AWS services from the command line. It will be used to upload the static files to S3.

It is worth noting that AWS has different locations (regions) where resources can be deployed. My infrastructure will be located in the us-east-1 region (Northern Virginia) as it offers the most services and integrations.

Terraform

- Terraform is an Infrastructure-as-Code (IaC) tool that allows us to create, modify and version infrastructure securely and efficiently across different cloud service providers. In this project it will be used to create the required infrastructure on AWS.

Hugo

- Hugo is a static website builder framework (the fastest in the world according to its own website) written in Go. It allows me to generate the website from markdown files that I upload to the GitHub repository.

GitHub Actions

- GitHub Actions is a continuous integration and delivery (CI/CD) service that allows us to automate tasks. It will be responsible for detecting changes to the repository and performing the necessary steps to generate and deploy the web to AWS, calling Hugo, Terraform and AWS CLI in the process.

Expected results

The goal of the project is to automatically generate and deploy a website and its infrastructure from the files we upload to the GitHub repository.

Any changes we make to the repository will be automatically reflected in the website.

Price

The project focuses on the ability to run a static (lightweight) website at no cost, so the free tiers of AWS will be used (list of free services).

The only cost to consider is the cost of registering a domain, which can be less than €2 per year depending on the provider.

We could get a domain from Route 53 and avoid leaving the AWS ecosystem, but this is usually more expensive than other providers (depending on availability and offers).

Why automate the deployment of a static website?

Task automation is a widespread practice in the IT world, and especially in the DevOps philosophy. It allows us to save time and effort, as well as reducing the possibility of making mistakes.

For this reason, it is interesting to start learning with a smaller project like this one, which will allow us to familiarise ourselves with the technologies to be used and serve as a basis for more complex projects.

Preparing the environment

Before you start setting up the infrastructure, you need to prepare your working environment.

If you do not already have an account on Amazon Web Services and GitHub, you will need to create one before proceeding.

Setting up MFA

If you have not already done so, or if you have just created an account on [AWS](https://aws.amazon.com/), it is advisable to configure multi-factor authentication (MFA). After all, AWS is a service that can cost you a lot of money, so any protection against credential theft is worth considering.

To do this, we open the AWS (web) console and search for the IAM service. Once there, a warning tells us that the account does not have MFA enabled. Follow the instructions to set it up.

In my case, as an Android user, I have and recommend the Aegis application as it is open source and has worked very well for me (in addition to its many configuration options, which I will not go into here). For iOS users, Raivo OTP is an alternative.

With MFA enabled, the next step is to create an alternate user to the root user of the AWS account.

IAM user creation

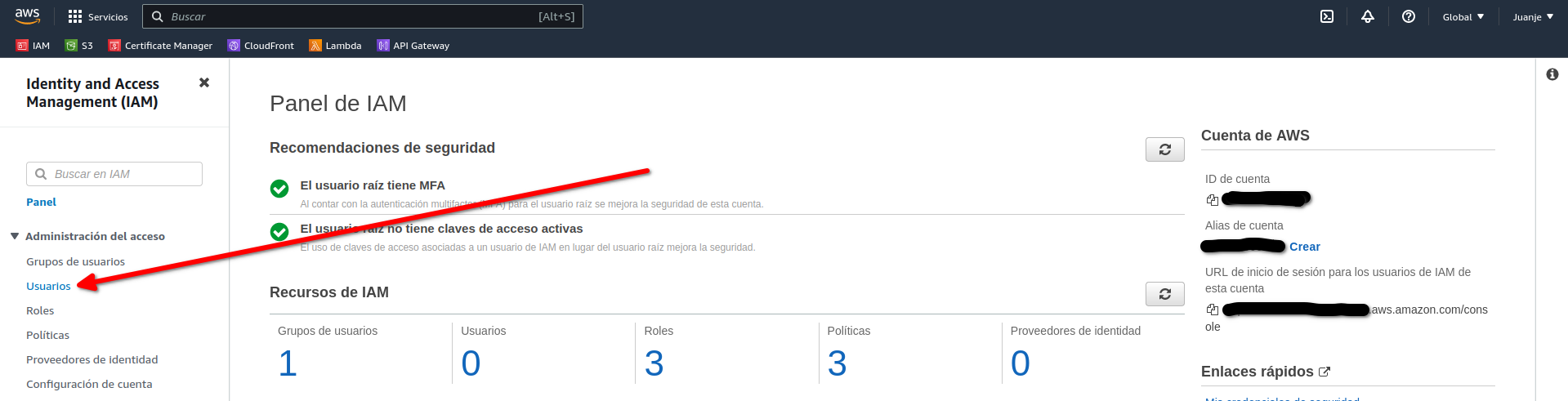

We will create a user with programmatic access. Log in to the AWS (web) console as root and locate the IAM service:

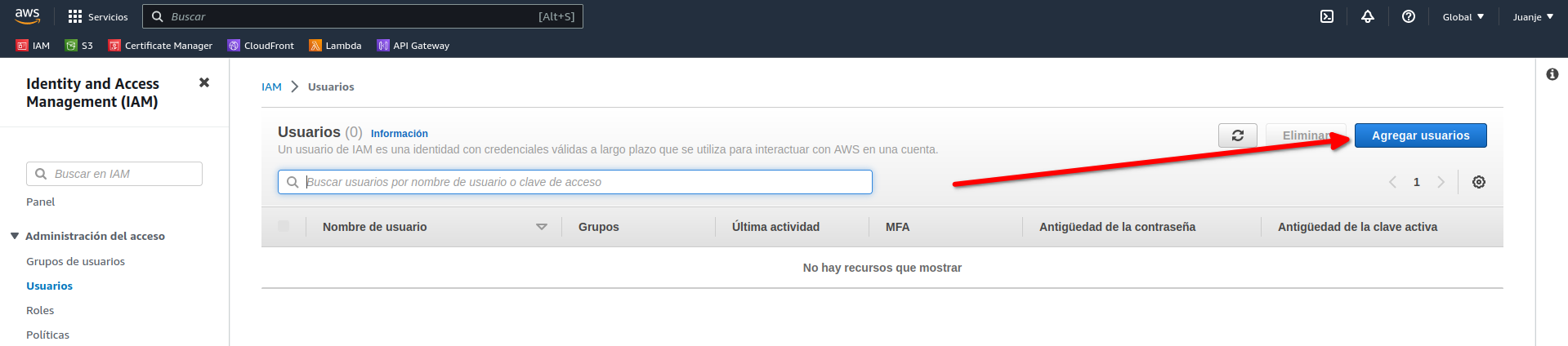

Navigate to the Users section and create a user:

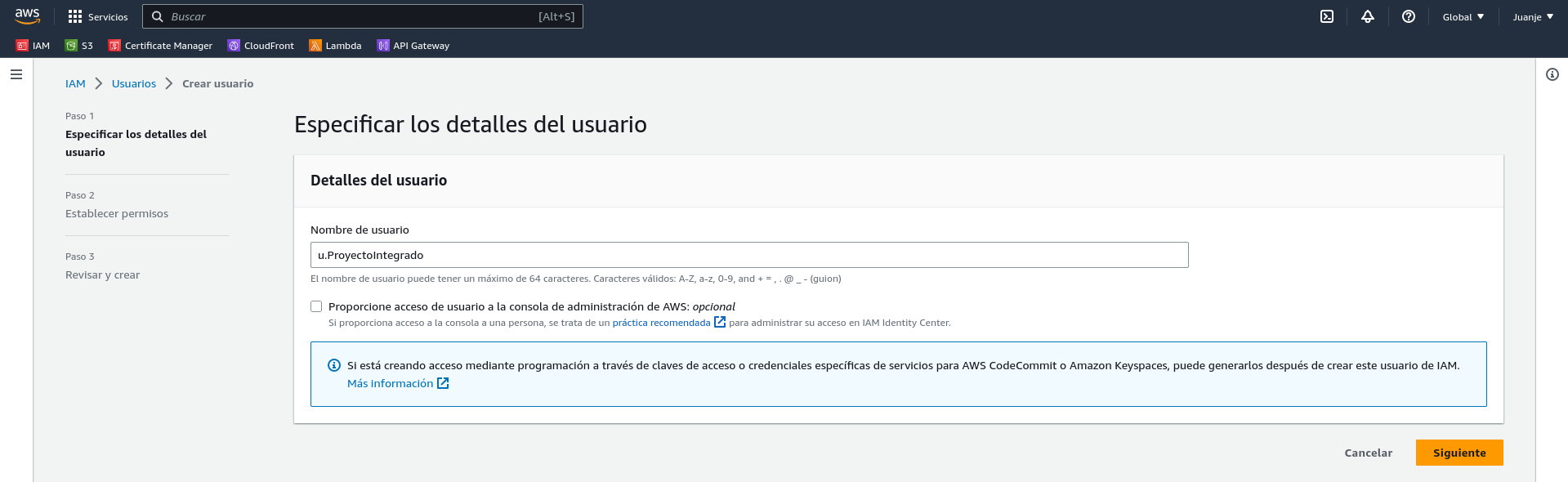

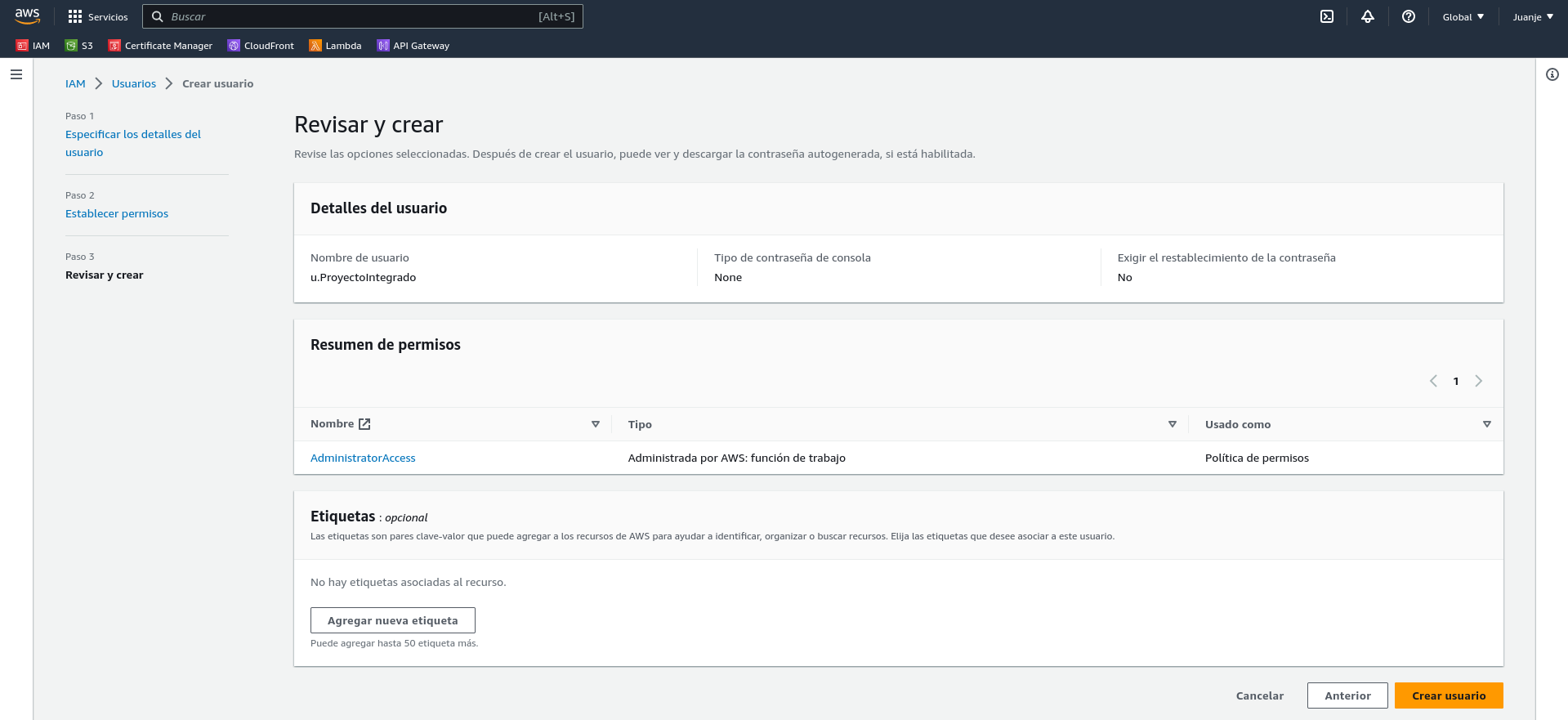

We give it a name (u.ProyectoIntegrado):

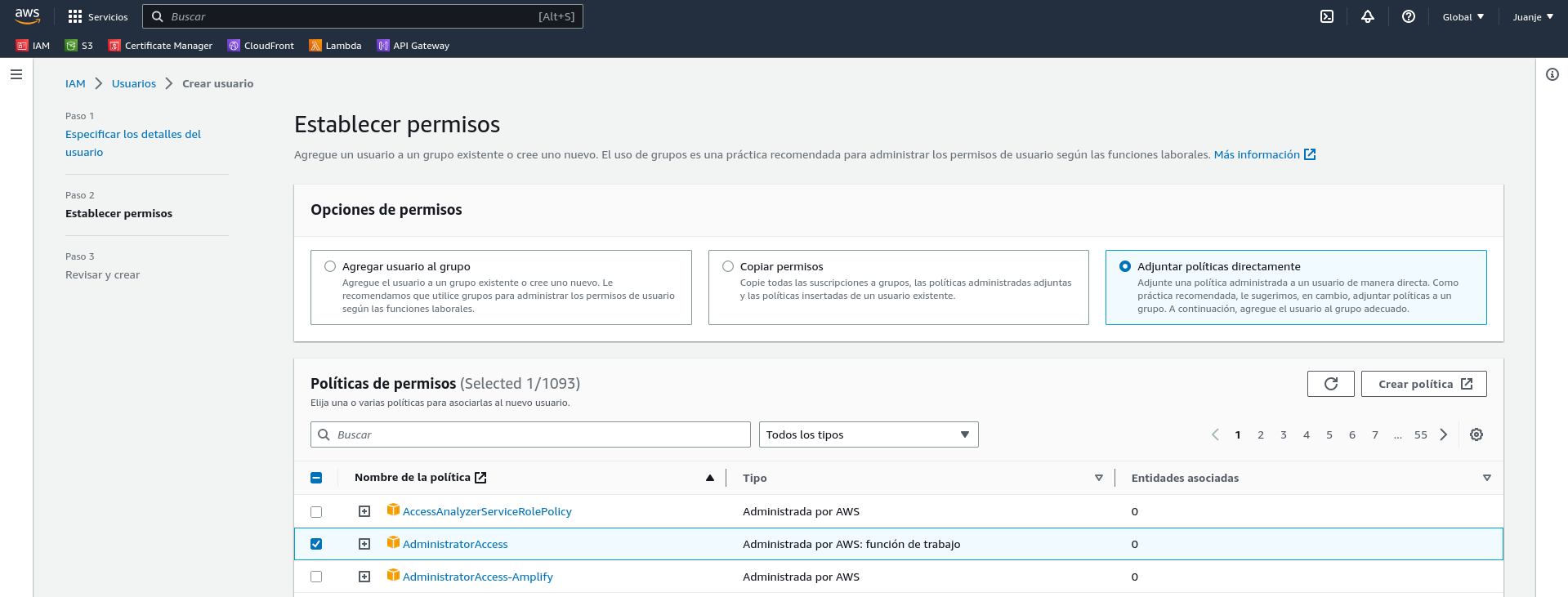

In the next screen we select the Attach Policies Directly option and search for the AdministratorAccess policy:

Click Next, assign tags if necessary, check that everything is correct and create the user:

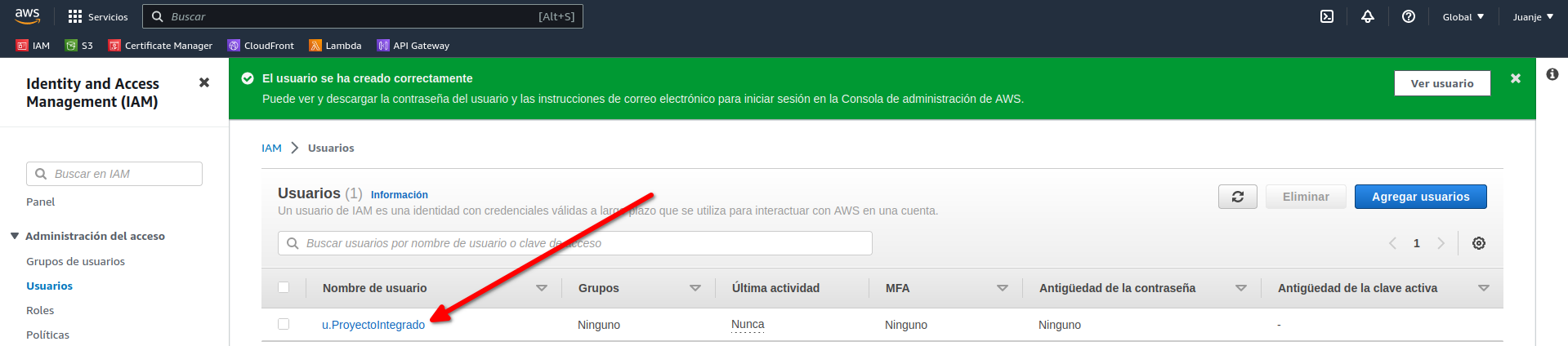

Once the user has been created, we need to generate some credentials. To do this, click on the user:

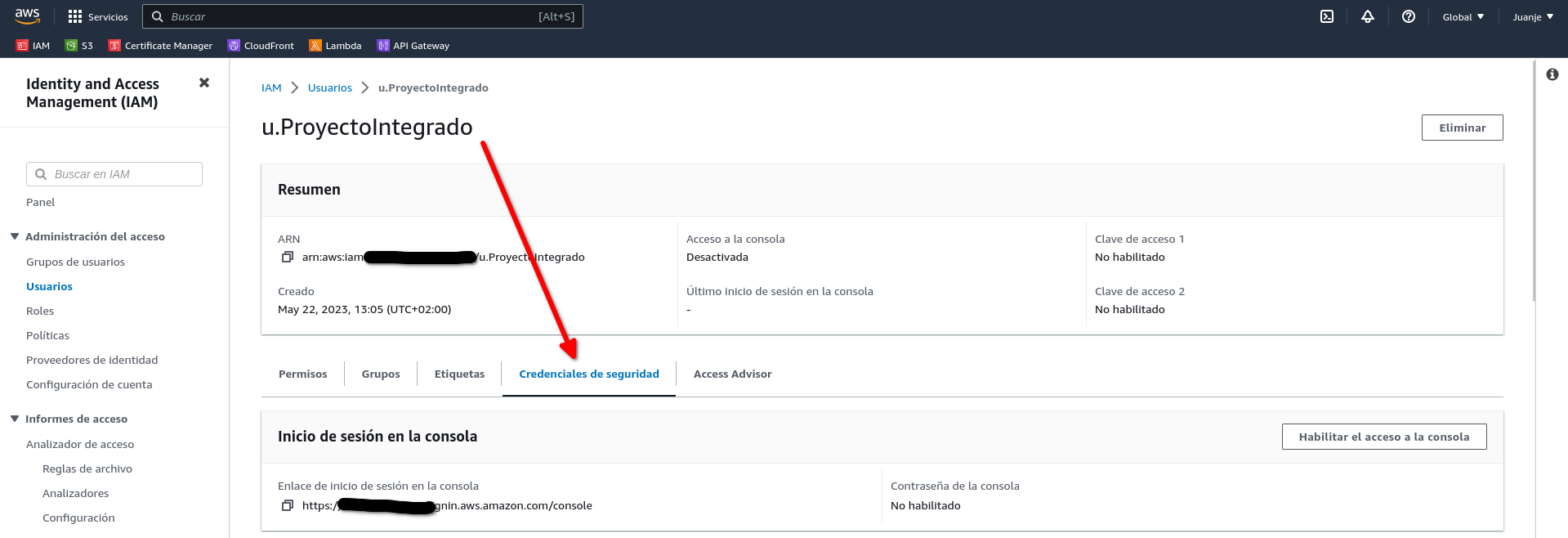

And select Security credentials:

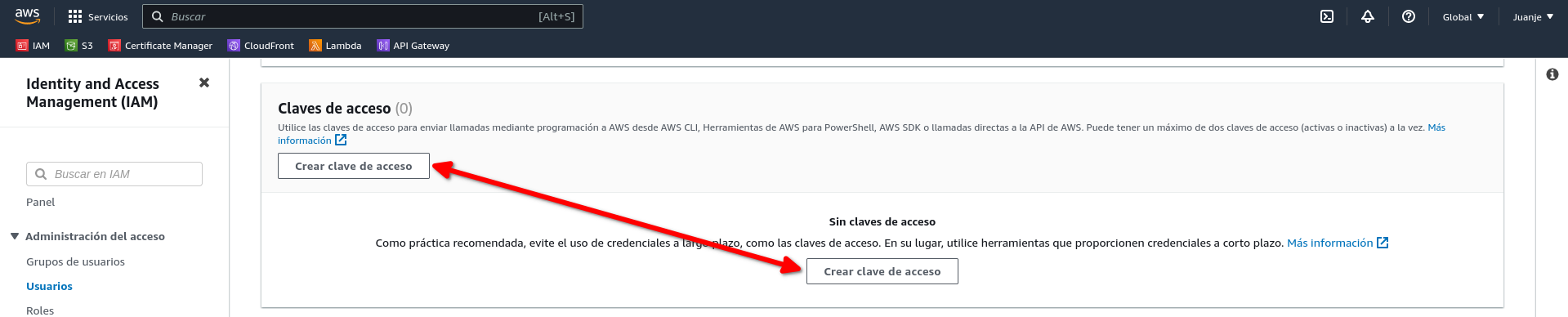

Scroll down to the Access Keys section and select Create Access Key:

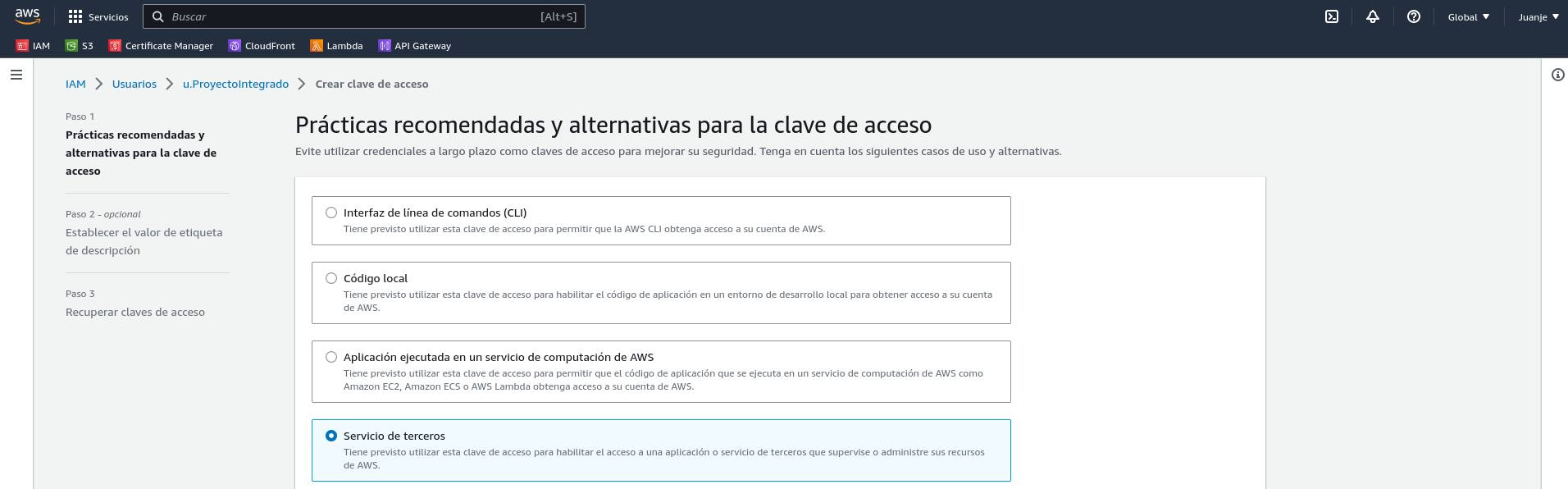

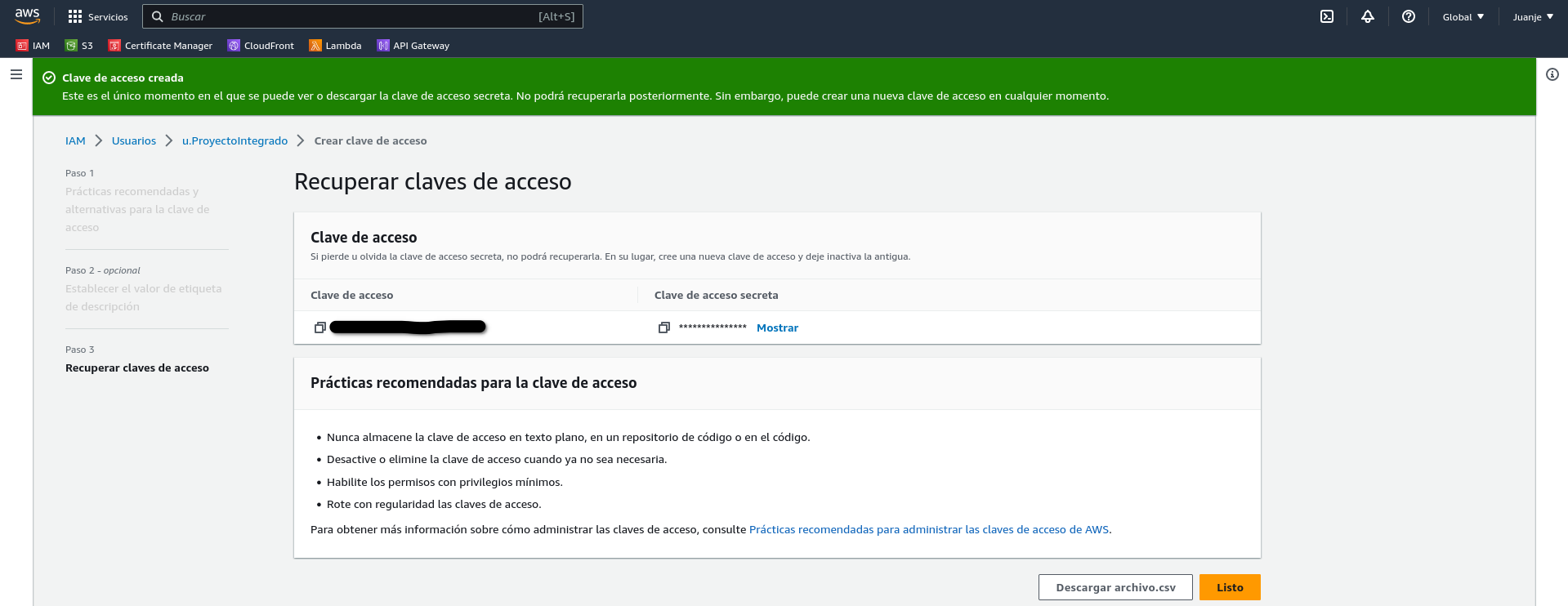

Select the use case:

In the next screen, we can give the key a description if we wish. After that, we have finished creating the key:

IMPORTANT: At this point we will be shown the Access Key and the Secret Key. We must keep these in a safe place as we will not be able to access the secret key again.

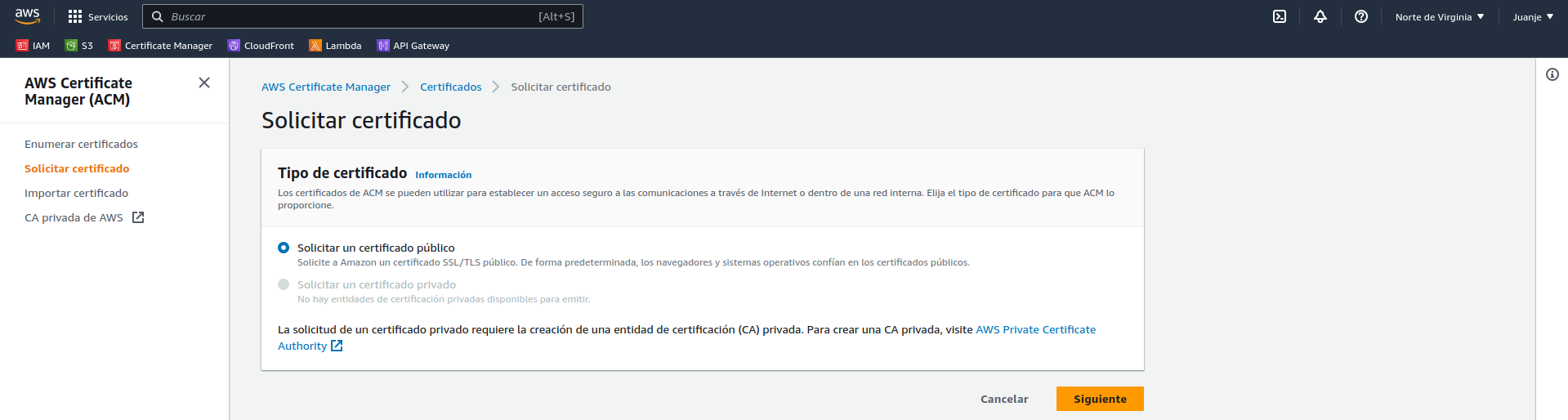

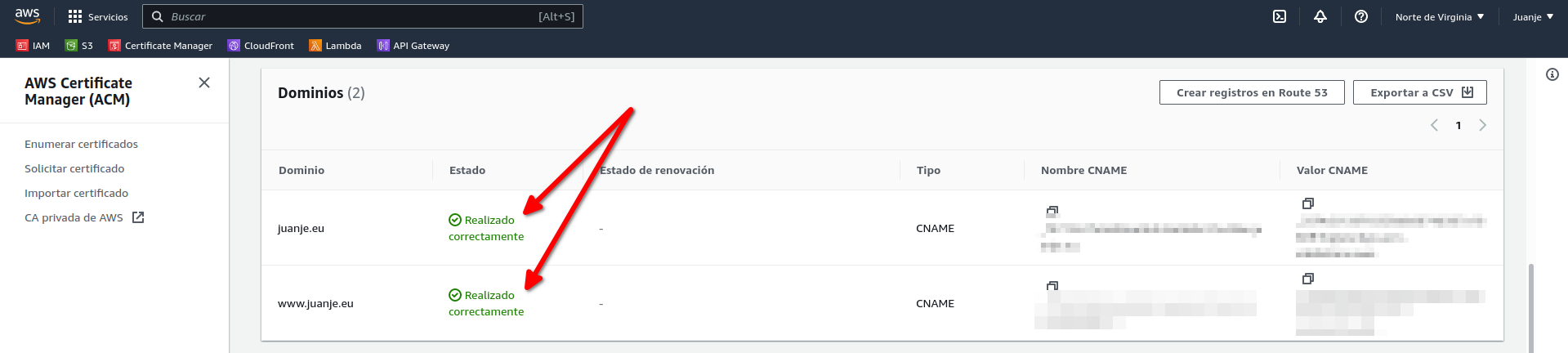

Creating an SSL Certificate

In order to use HTTPS on our website, we need an SSL certificate. To get one for free and easily, I will use AWS Certificate Manager (ACM).

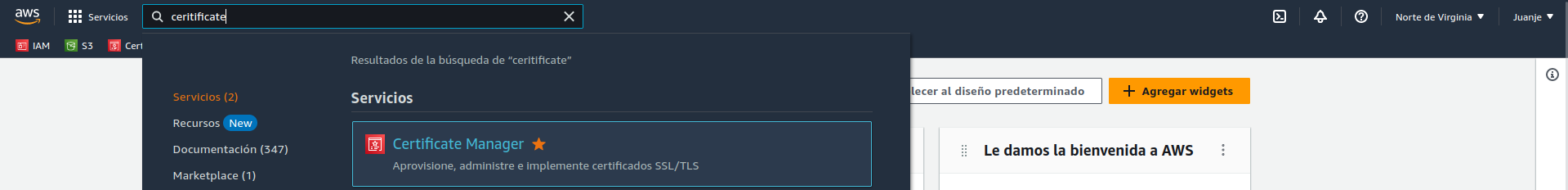

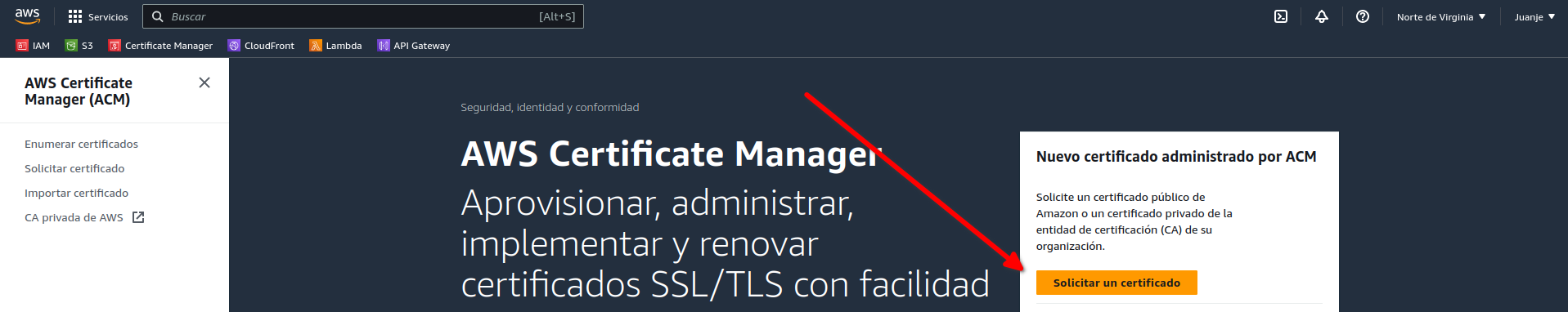

We go to the AWS (web) console and look for the ACM service:

Select Request a Certificate and choose Public:

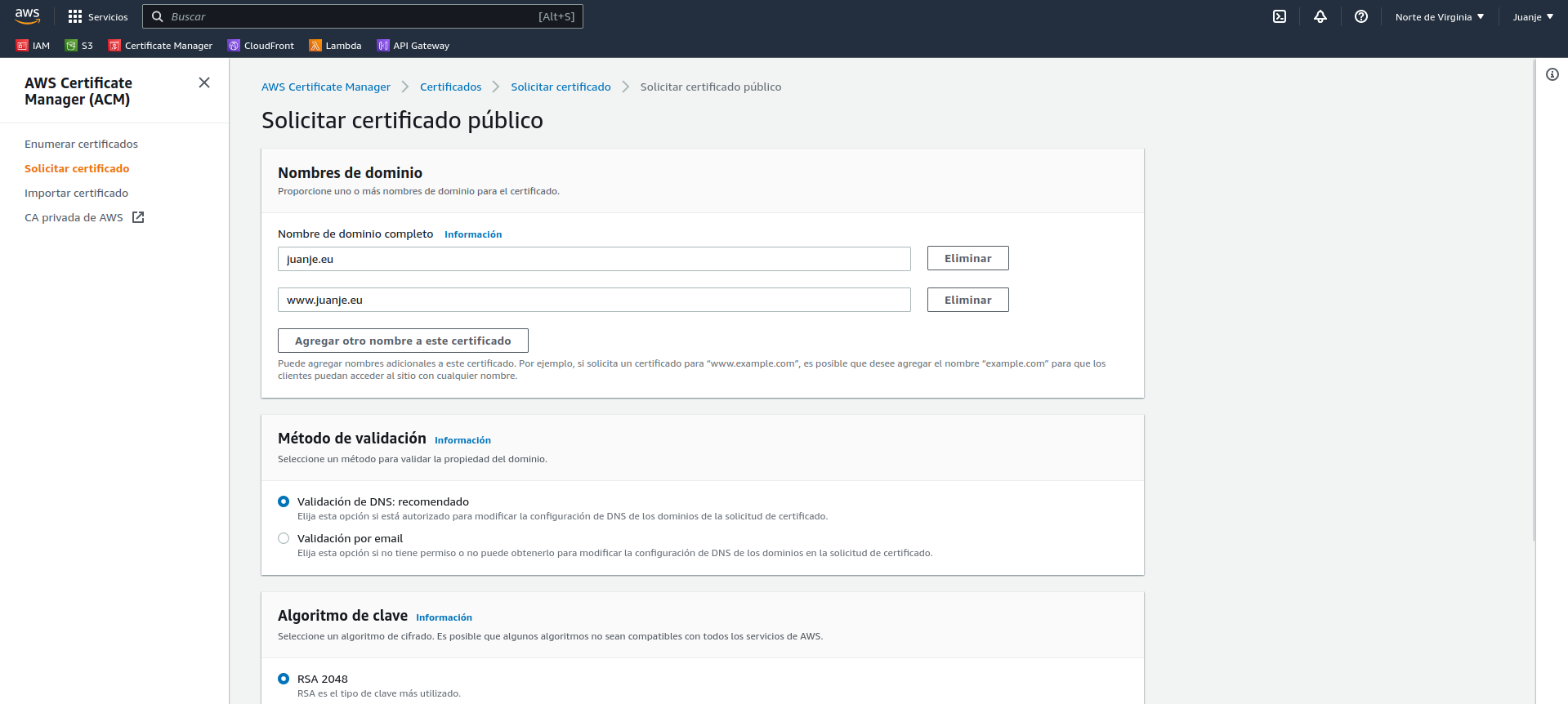

On the next screen we enter our domain names (in my case juanje.eu and www.juanje.eu); the rest of the options can be left at their defaults (DNS validation and RSA 2048):

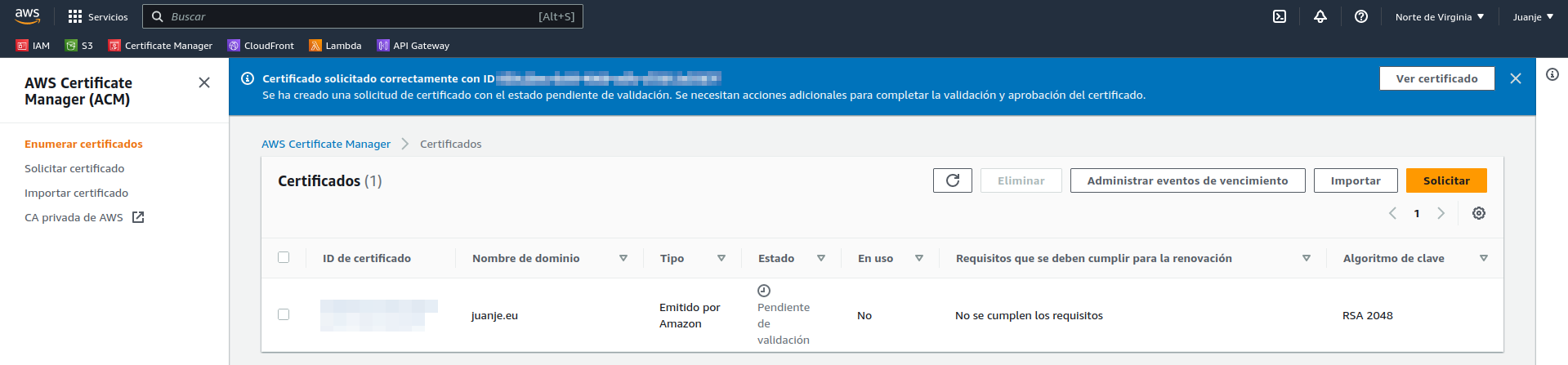

When finished, we will see the certificate and a warning that it is pending validation:

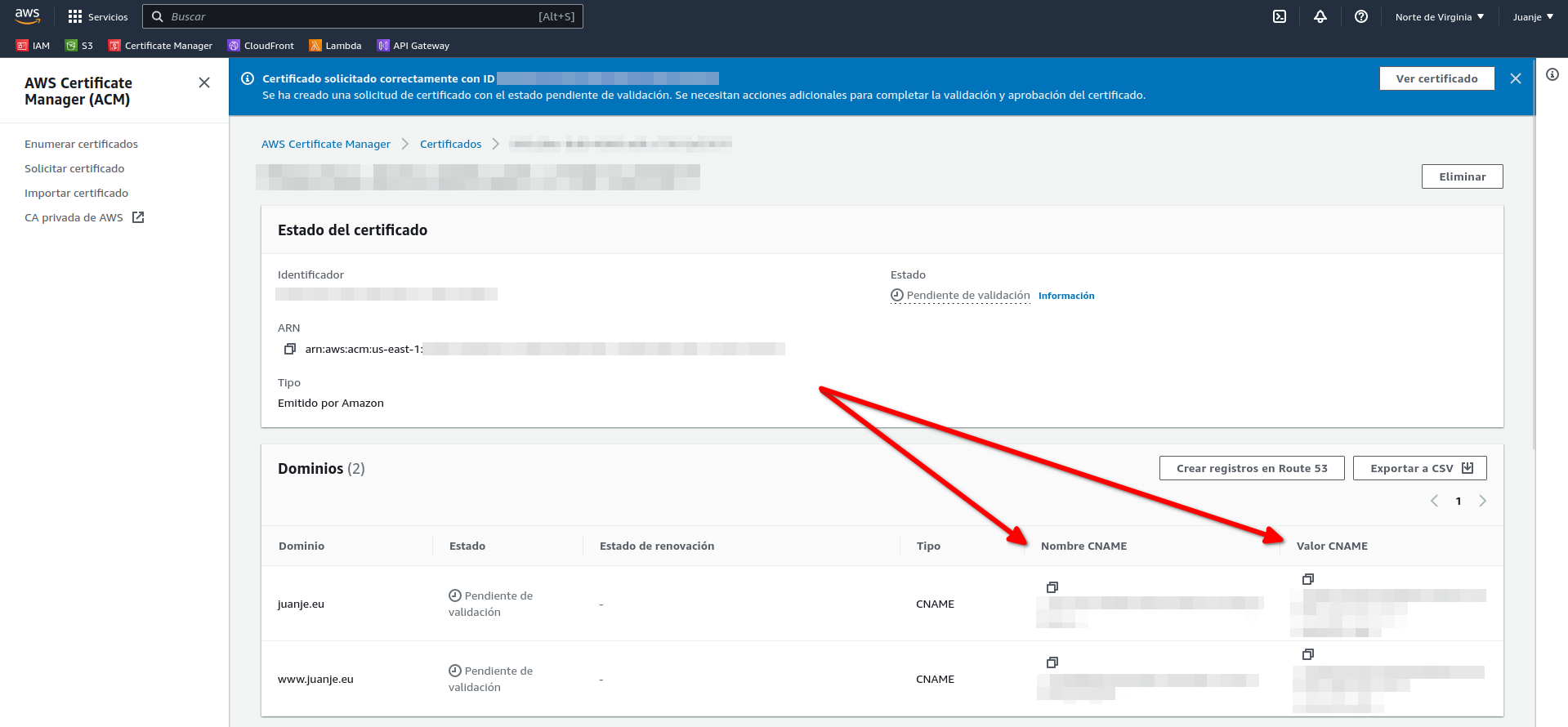

To validate it, we need to create a DNS record; the data to use is shown when we open the certificate:

We copy the data and go to our domain provider, add the corresponding DNS records and wait for them to be validated:

After a few minutes (waiting time may vary) the certificate is validated:

Now that we have our SSL certificate, we can move on to creating the repository to upload all the code we are going to create next.

Creating the GitHub repository

I will be using Git and GitHub to manage the code. First, I will create a repository on GitHub called Proyecto-Integrado-ASIR:

Then I create a local folder where I will put all the code I create and connect it to my remote repository:

mkdir Proyecto-Integrado-ASIR
cd Proyecto-Integrado-ASIR
echo "# Proyecto-Integrado-ASIR" >> README.md
git init
git add README.md
git commit -m "first commit"
git branch -M main
git remote add origin https://github.com/JuanJesusAlejoSillero/Proyecto-Integrado-ASIR.git
git push -u origin main

This will have created our repository and connected it to our local folder, ready to store code.

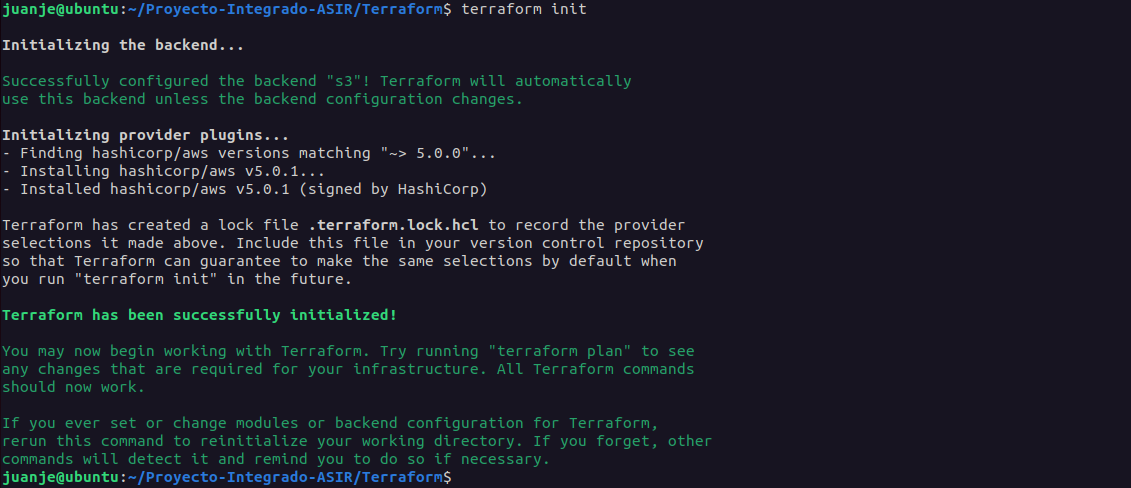

Terraform

To set up the infrastructure I will be using Terraform, an open source tool developed by HashiCorp that allows us to create, modify and version it in a simple (declarative) way.

Terraform configuration files are written in HCL (HashiCorp Configuration Language).

Its operation is divided into 3 phases, which work as follows

-

terraform init: Initialises the project, downloading the necessary plugins for the providers we have declared in its configuration (in our case, AWS).

-

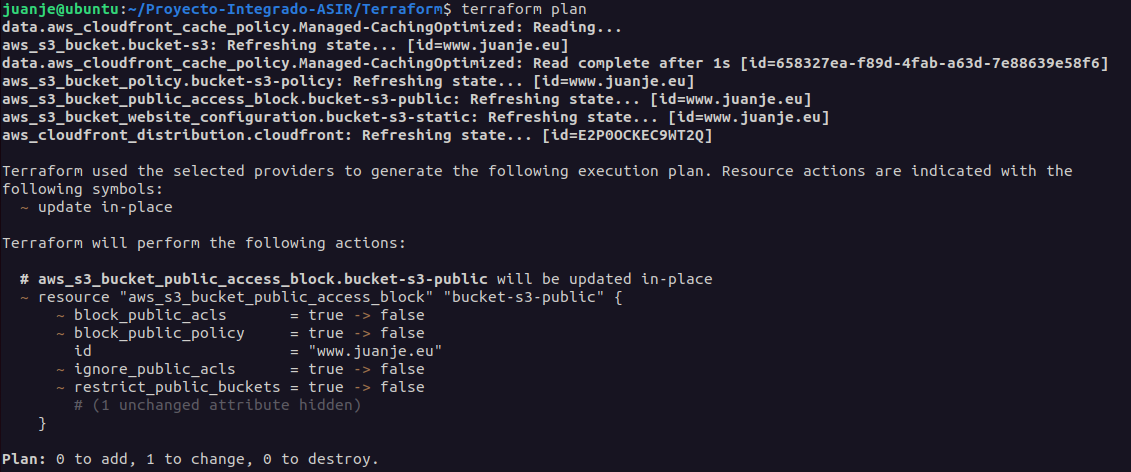

terraform plan: Shows the changes to be made based on the configuration files we have written.

-

terraform apply: Applies the changes shown in the previous phase.
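Run by hand from the folder that holds the configuration files, the three phases could look like the sketch below. The `run` helper and the `tfplan` file name are illustrative assumptions, not part of the original project. By default the helper only prints each command, so nothing is changed until `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print each command instead of running it unless DRY_RUN=0 is set (hypothetical helper).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# The three phases in order; each one only runs if the previous one succeeded.
terraform_cycle() {
  # init downloads the declared providers, plan saves the proposed changes
  # to a file, and apply executes exactly that saved plan.
  run terraform init -input=false &&
    run terraform plan -out=tfplan &&
    run terraform apply tfplan
}

terraform_cycle
```

Saving the plan with `-out` and then applying that file means the apply phase executes exactly what the plan phase showed.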

Each object managed by Terraform is called a resource; this can be an instance, a database, a certificate, etc.

The lifecycle of the resources is completely managed, from the moment they are created until they are destroyed. Terraform will take care of creating, modifying and deleting the resources we have defined in the configuration files.

If a resource is modified in parallel via the AWS Web Console or another tool, when executing a terraform apply later, Terraform will take care of restoring the resource to the state defined by us in the configuration files, reversing any changes that may have been made.

Terraform records the state of the infrastructure in a state file. This file can be stored locally or on a remote backend.

Because the pipeline will run Terraform on GitHub Actions runners, which do not keep files between runs, the state file will be stored in an S3 bucket (remote backend), so we need to create that bucket before starting with the Terraform configuration.

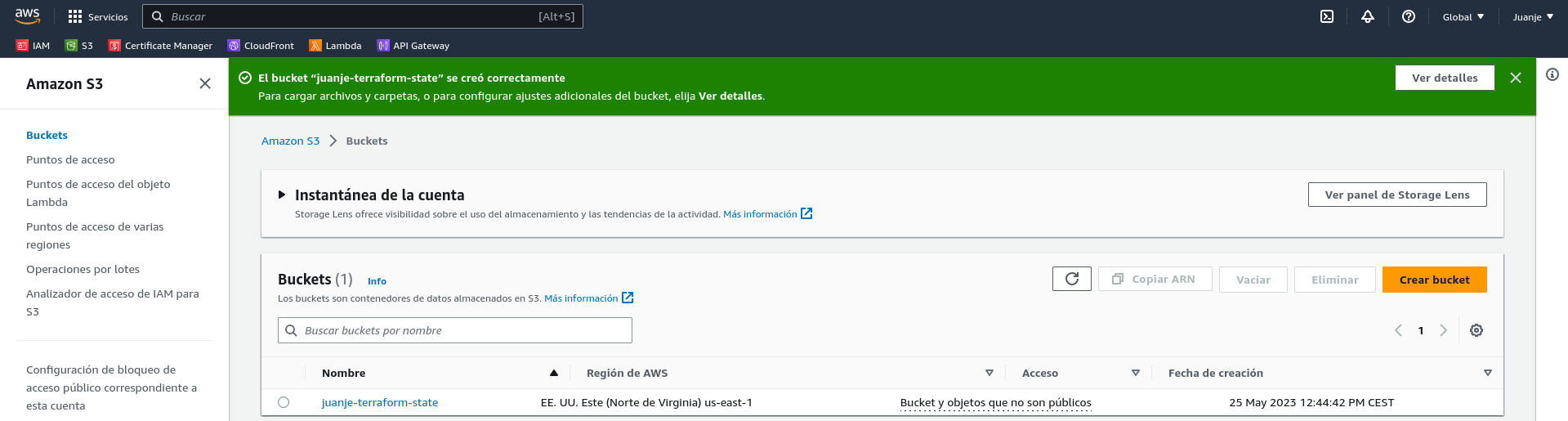

Create the S3 bucket for the remote state

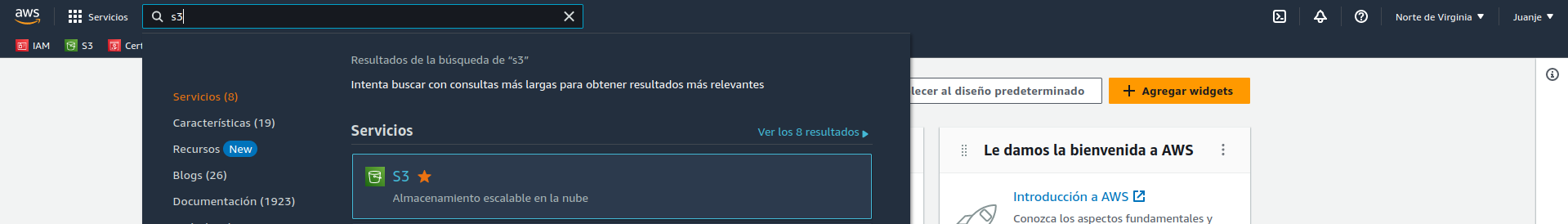

We access the AWS (web) console and look for the S3 service:

Create a bucket:

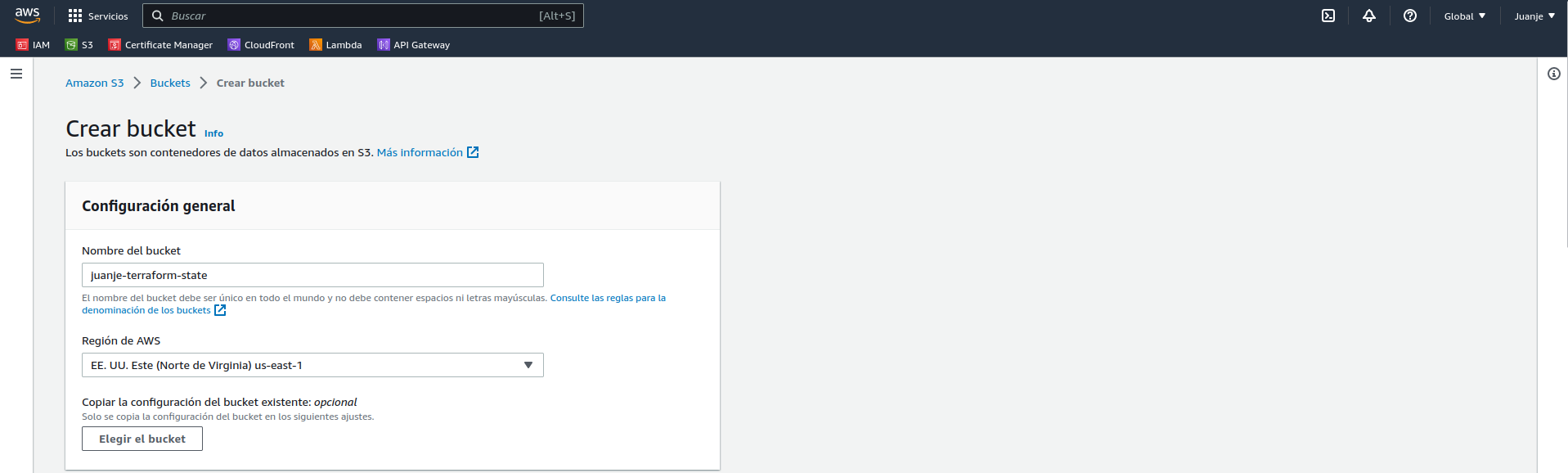

We give it a name, which must be globally unique (juanje-terraform-state), and select the region where we want the bucket to be created (us-east-1). The other default options do not need to be changed.
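For readers who prefer the command line, the same bucket could presumably be created with the AWS CLI. This is a sketch that assumes the CLI is installed and configured with the IAM user's credentials. The `run` helper is hypothetical and only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print each command instead of running it unless DRY_RUN=0 is set (hypothetical helper).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

STATE_BUCKET="juanje-terraform-state"
REGION="us-east-1"

create_state_bucket() {
  # us-east-1 is the default location, so no LocationConstraint is passed here.
  # head-bucket then confirms that the bucket exists and is reachable.
  run aws s3api create-bucket --bucket "$STATE_BUCKET" --region "$REGION" &&
    run aws s3api head-bucket --bucket "$STATE_BUCKET"
}

create_state_bucket
```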

With the remote state bucket created, we can move on to the Terraform configuration.

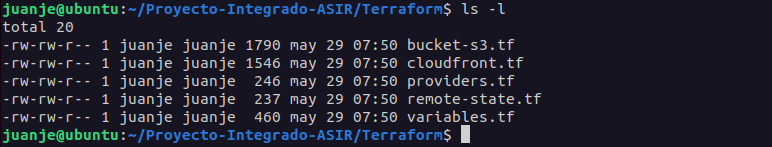

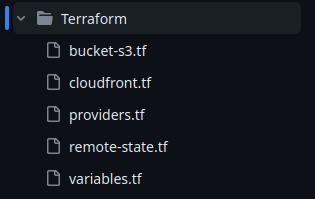

Configuring Terraform

To configure Terraform, I will create a folder called Terraform in the repository we created earlier. Now we have two options: create a configuration file for each resource or create one general configuration file for all resources. For ease of organisation I will use the first option, so I will create the following files (their names are not important, but the extension and content are):

- remote-state.tf: Remote state configuration file.

- provider.tf: Terraform providers configuration file. A provider is a plugin that allows us to interact with an infrastructure provider (in our case, AWS).

- variables.tf: File containing the variables we will use in the resource configuration files.

- bucket-s3.tf: S3 bucket configuration file.

- cloudfront.tf: Configuration file for the CloudFront distribution.

The first file to create is the remote state file:

/*
  remote-state.tf
  Remote state configuration file for the Terraform + AWS project
*/

terraform {
  backend "s3" {
    bucket = "juanje-terraform-state"
    key    = "terraform.tfstate"
    region = "us-east-1"
  }
}

First, we specify that the backend we will use is S3. We then pass it the name of the bucket we created earlier for this purpose, the name of the state file (which can also be a path, such as state/file.tfstate) and the region where the bucket is located.

Contents of provider.tf:

/*
  providers.tf
  Providers file for the Terraform + AWS project
*/

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0.0"
    }
  }
}

provider "aws" {
  region = var.aws_region
}

In this file we require the AWS provider (hashicorp/aws) with the version constraint ~> 5.0.0, which accepts 5.0.0 and any later 5.0.x patch release but not 5.1.0 or higher, to avoid future compatibility problems. Additionally, we provide the region to be used as a variable, which will be defined in the variables.tf file.
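With a three-part constraint such as `~> 5.0.0`, Terraform lets only the last (patch) component increase. The shell sketch below mimics that rule for illustration. The function `satisfies_pessimistic` is a hypothetical helper, not something Terraform provides, and it assumes plain `MAJOR.MINOR.PATCH` version strings.

```shell
#!/bin/sh
# Succeeds when VERSION satisfies the three-part constraint "~> CONSTRAINT":
# same major and minor numbers, and a patch number at least as high.
satisfies_pessimistic() (
  constraint="$1"
  version="$2"
  c_major=${constraint%%.*}; c_rest=${constraint#*.}
  c_minor=${c_rest%%.*};     c_patch=${c_rest#*.}
  v_major=${version%%.*};    v_rest=${version#*.}
  v_minor=${v_rest%%.*};     v_patch=${v_rest#*.}
  [ "$v_major" -eq "$c_major" ] &&
    [ "$v_minor" -eq "$c_minor" ] &&
    [ "$v_patch" -ge "$c_patch" ]
)

for candidate in 5.0.0 5.0.1 5.1.0 6.0.0; do
  if satisfies_pessimistic 5.0.0 "$candidate"; then
    echo "$candidate satisfies ~> 5.0.0"
  else
    echo "$candidate is rejected by ~> 5.0.0"
  fi
done
```

A looser constraint such as `~> 5.0` would also accept later 5.x minor releases.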

The variables.tf file:

/*
  variables.tf
  Variables file for the Terraform + AWS project

  Variables used in the files:
    - providers.tf
    - bucket-s3.tf
    - cloudfront.tf
*/

variable "aws_region" {
  type    = string
  default = "us-east-1"
}

variable "domain" {
  type    = string
  default = "www.juanje.eu"
}

variable "aws_arn_certificado" {
  type    = string
  default = "arn:aws:acm:us-east-1:540012000352:certificate/958c20ac-8c69-4809-adfc-c75812e3587f"
}

Here we define the AWS region we will be using, giving it the default value of us-east-1 (Northern Virginia), the domain of our website, which we will use to name the S3 bucket that will hold the static files, and the ARN (Amazon Resource Name) of the SSL certificate we created earlier.

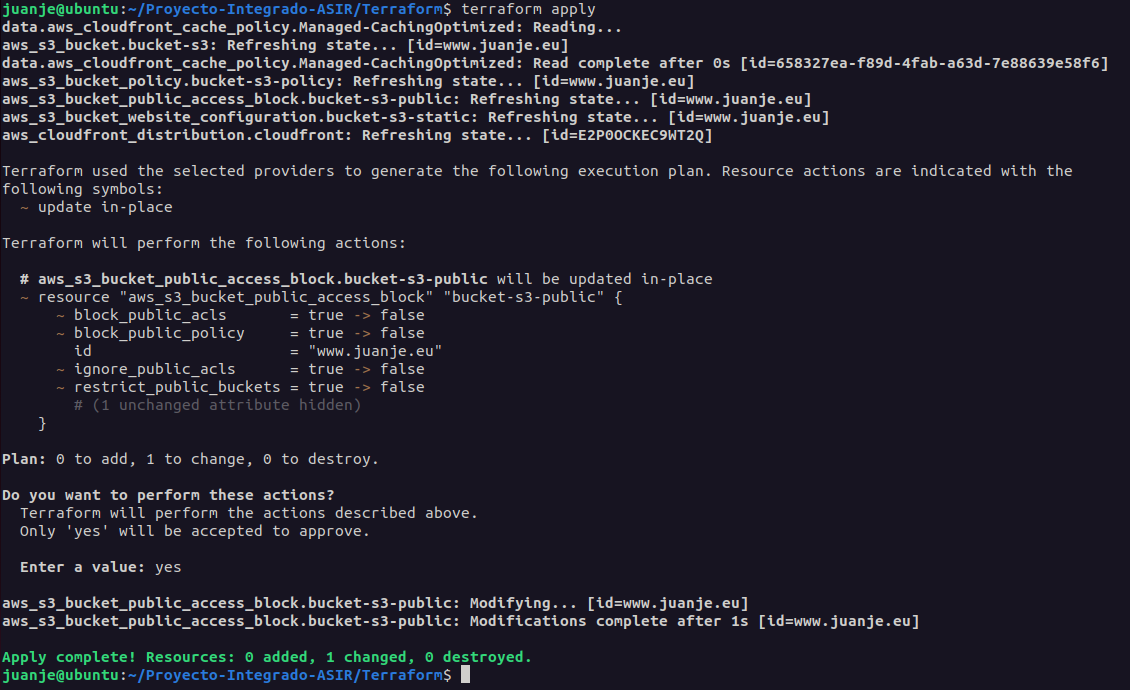

The file bucket-s3.tf:

/*
  bucket-s3.tf
  S3 bucket configuration file that will hold the static web files for the Terraform + AWS project.
*/

// Create the S3 bucket, named after the "domain" variable:
resource "aws_s3_bucket" "bucket-s3" {
  bucket        = var.domain
  force_destroy = true
}

// Configuration to enable static website hosting on the S3 bucket:
resource "aws_s3_bucket_website_configuration" "bucket-s3-static" {
  bucket = aws_s3_bucket.bucket-s3.id

  index_document {
    suffix = "index.html"
  }

  error_document {
    key = "404.html"
  }
}

// Settings to enable public access to the S3 bucket:
resource "aws_s3_bucket_public_access_block" "bucket-s3-public" {
  bucket = aws_s3_bucket.bucket-s3.id

  block_public_acls       = false
  block_public_policy     = false
  ignore_public_acls      = false
  restrict_public_buckets = false
}

// Bucket policy that allows anyone to read the objects:
resource "aws_s3_bucket_policy" "bucket-s3-policy" {
  bucket = aws_s3_bucket.bucket-s3.id
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid       = "PublicReadGetObject"
        Effect    = "Allow"
        Principal = "*"
        Action = [
          "s3:GetObject"
        ]
        Resource = [
          "${aws_s3_bucket.bucket-s3.arn}/*"
        ]
      }
    ]
  })
}

This one is a little denser than the previous ones, but not very complicated:

- First, we create the S3 bucket, named after the domain variable, and tell it to be forcibly destroyed when the resource is deleted; otherwise the bucket could not be deleted while it had content.

- Next, we configure the bucket to host a static website, specifying the bucket and the names of the files that will be the main page and the error page.

- Finally, we configure the bucket to be public and add a policy so that its files can be read.
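To make the policy more concrete, the sketch below prints the JSON document that the `jsonencode` call should produce once the bucket ARN is filled in. The function name `render_public_read_policy` is a hypothetical helper for illustration, and the exact key order and whitespace Terraform emits may differ.

```shell
#!/bin/sh
# Render the public-read policy for a given bucket name (hypothetical helper).
# S3 bucket ARNs have the form arn:aws:s3:::BUCKET-NAME.
render_public_read_policy() {
  bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::${bucket}/*"]
    }
  ]
}
EOF
}

render_public_read_policy "www.juanje.eu"
```

After a deployment, the result could be compared with what `aws s3api get-bucket-policy --bucket www.juanje.eu --query Policy --output text` returns.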

The final file, cloudfront.tf:

/*
  cloudfront.tf
  CloudFront distribution configuration file for the Terraform + AWS project
*/

// Caching policy for CloudFront distribution:
data "aws_cloudfront_cache_policy" "Managed-CachingOptimized" {
  id = "658327ea-f89d-4fab-a63d-7e88639e58f6"
}

// Creating the CloudFront distribution:
resource "aws_cloudfront_distribution" "cloudfront" {
  enabled             = true
  is_ipv6_enabled     = false
  aliases             = [var.domain]
  default_root_object = "index.html"

  origin {
    domain_name = aws_s3_bucket_website_configuration.bucket-s3-static.website_endpoint
    origin_id   = aws_s3_bucket_website_configuration.bucket-s3-static.website_endpoint

    custom_origin_config {
      http_port              = 80
      https_port             = 443
      origin_protocol_policy = "http-only"
      origin_ssl_protocols   = ["TLSv1.2"]
    }
  }

  default_cache_behavior {
    compress               = true
    viewer_protocol_policy = "redirect-to-https"
    allowed_methods        = ["GET", "HEAD"]
    cached_methods         = ["GET", "HEAD"]
    target_origin_id       = aws_s3_bucket_website_configuration.bucket-s3-static.website_endpoint
    cache_policy_id        = data.aws_cloudfront_cache_policy.Managed-CachingOptimized.id
  }

  price_class = "PriceClass_100"

  viewer_certificate {
    acm_certificate_arn      = var.aws_arn_certificado
    ssl_support_method       = "sni-only"
    minimum_protocol_version = "TLSv1.2_2021"
  }

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }
}

This configuration specifies the following:

- First, we look up by its ID the cache policy we will use, which is the one recommended by AWS for static websites.

- Then, we create the CloudFront distribution, specifying that it is enabled, does not use IPv6, that its alias is the domain from the domain variable, and that the default file is index.html.

- In the second half, we indicate that the origin of the distribution is the website endpoint of the S3 bucket we defined earlier, that files can be compressed, and that all HTTP connections are redirected to HTTPS. We also specify the price class (the geographical locations of the CloudFront CDN that will be used), the SSL certificate, and that no geographic restrictions are imposed.
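The origin is the bucket's website endpoint, which S3 serves over plain HTTP only; that is why the origin block uses `origin_protocol_policy = "http-only"`. As a rough sketch, the host name behind `website_endpoint` can be pictured as below. `website_endpoint` here is a hypothetical shell helper, and the dash form `s3-website-REGION` is assumed because it is what us-east-1 uses (some other regions use `s3-website.REGION`).

```shell
#!/bin/sh
# Build the S3 website endpoint host name for a bucket (hypothetical helper).
# Assumes the dash form used by us-east-1: BUCKET.s3-website-REGION.amazonaws.com
website_endpoint() {
  printf '%s.s3-website-%s.amazonaws.com\n' "$1" "$2"
}

origin="$(website_endpoint "www.juanje.eu" "us-east-1")"
echo "CloudFront origin: http://${origin}"
```

Once the bucket has content, `curl -I "http://${origin}/"` is one way to check that the origin responds before looking at the distribution itself.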

With these 5 files, our entire infrastructure is defined.

We upload them to GitHub:

git add .
git commit -m "Archivos Terraform"
git push

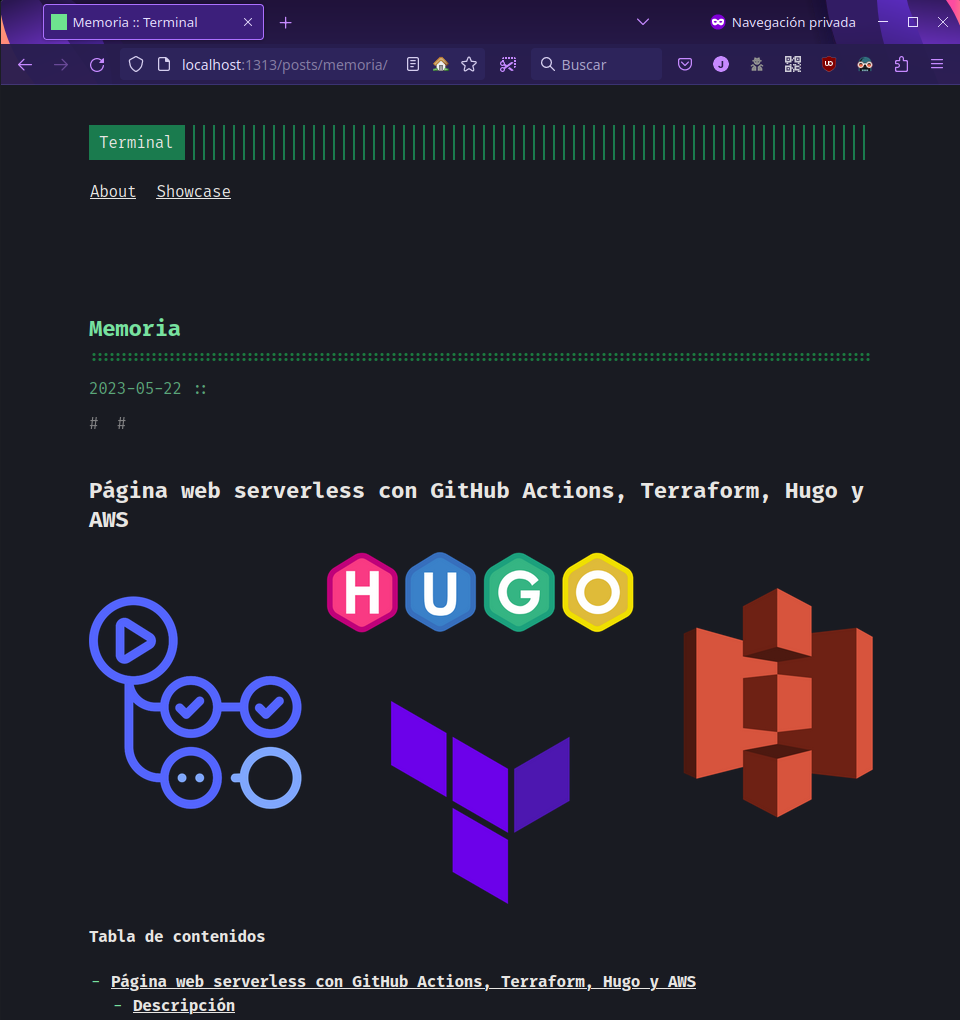

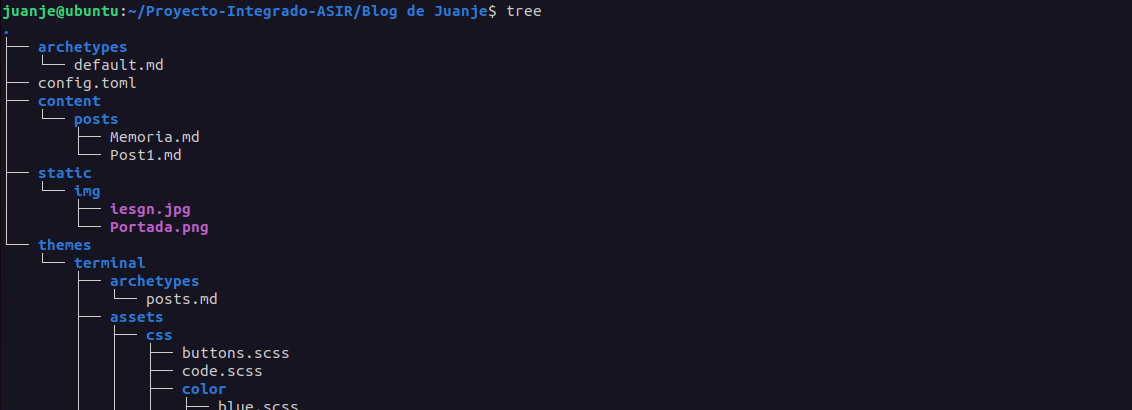

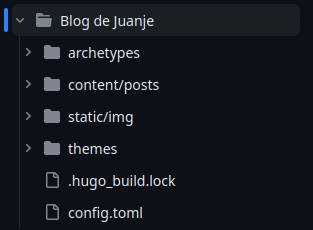

Hugo

To create the static website, I will use Hugo (extended). On Ubuntu 22.04, I have installed it as follows (instructions for other operating systems):

sudo apt update
sudo apt install hugo

We create the folder for the new site:

hugo new site "Blog de Juanje"
cd "Blog de Juanje"

We install the theme we want, in my case, terminal:

git init
git submodule add -f https://github.com/panr/hugo-theme-terminal.git themes/terminal

To configure it, I edit the config.toml file with the content provided in the documentation and customize it to my liking.

IMPORTANT: To avoid issues, as mentioned in this GitHub issue, we must remove the baseURL parameter from the config.toml file. In my case, when it was set, the website didn’t function correctly after being uploaded to AWS.

To create a new post, we run:

hugo new posts/mi-entrada.md

After writing it, we can see the result with:

hugo server -D

And accessing the provided address (usually http://localhost:1313/).

After checking that it works correctly, we remove the resources folder created when running the previous command:

rm -rf resources

It would look like this:

And we upload the content to GitHub:

git add .
git commit -m "Archivos Hugo"
git push

GitHub

GitHub Actions

To automate the process of creating the static website and uploading it to S3, I will use a GitHub Actions workflow. Workflows are configuration files written in YAML that allow automation of processes on GitHub. In my case, I will create one that runs every time a push is made to the directories containing Terraform or Hugo files.
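The effect of such a path filter can be pictured with a small shell sketch. It is a rough stand-in, not GitHub's real matcher, and it ignores the branch condition. The function name `triggers_workflow` is hypothetical; the two directory names are the ones used in this project (Terraform and Blog de Juanje).

```shell
#!/bin/sh
# Succeeds when a changed file lives under one of the two watched directories.
triggers_workflow() {
  case "$1" in
    "Terraform/"* | "Blog de Juanje/"*) return 0 ;;
    *) return 1 ;;
  esac
}

for changed in "Terraform/cloudfront.tf" "Blog de Juanje/content/posts/mi-entrada.md" "README.md"; do
  if triggers_workflow "$changed"; then
    echo "run:  $changed"
  else
    echo "skip: $changed"
  fi
done
```

A push that only touches README.md would therefore not start the pipeline.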

The file will be named workflow.yaml and must be located in the .github/workflows folder:

mkdir -p .github/workflows
touch .github/workflows/workflow.yaml

The content of the file will be as follows:

#
# workflow.yaml
# GitHub Actions configuration file for:
# 1. Verifying and deploying the infrastructure with Terraform when a push is made to the "Terraform" or "Blog de Juanje" directories.
# 2. Building the website with Hugo and deploying it to S3 when a push is made to the "Blog de Juanje" directory.
#

name: "Workflow"

on:
  push:
    branches:
      - main
    paths:
      - "Terraform/**"
      - "Blog de Juanje/**"

env:
  AWS_REGION: "us-east-1"
  AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
  AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}

jobs:
  terraform:
    name: "Terraform"
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: "Terraform"
    steps:
      - name: "Clone repository"
        uses: actions/checkout@v3

      - name: "Setup Terraform"
        uses: hashicorp/setup-terraform@v2.0.3

      - name: "Terraform Init"
        timeout-minutes: 2
        run: terraform init -input=false

      - name: "Terraform Apply"
        run: terraform apply -auto-approve

  hugo-deploy:
    needs: terraform
    name: "Hugo + Deploy"
    runs-on: ubuntu-latest
    steps:
      - name: "Clone repository including submodules (for the theme)"
        uses: actions/checkout@v3
        with:
          submodules: recursive

      - name: "Configure AWS CLI credentials"
        uses: aws-actions/configure-aws-credentials@v1
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: "Setup Hugo"
        uses: peaceiris/actions-hugo@v2.6.0
        with:
          hugo-version: "latest"
          extended: true

      - name: "Build"
        run: |
          cd "Blog de Juanje"
          hugo --minify

      - name: "Deploy to S3"
        run: |
          cd "Blog de Juanje/public"
          aws s3 sync \
            --delete \
            . s3://www.juanje.eu

      - name: "CloudFront Invalidation"
        run: |
          aws cloudfront create-invalidation \
            --distribution-id ${{ secrets.CLOUDFRONT_DISTRIBUTION_ID }} \
            --paths "/*"

It’s a fairly straightforward file, but I’ll detail some parts:

- First, the name of the workflow and when it should run are specified. In this case, it will run when a push is made to the main branch and any file in the Terraform or Blog de Juanje directories is modified.

- Next, the environment variables to be used in the workflow are defined. In this case, the AWS credentials and the region.

- Then, the jobs to be executed are defined. Two in this case:

  - terraform: It will run on an Ubuntu virtual machine and will be responsible for verifying and deploying the infrastructure with Terraform if necessary. To do this, it will first clone the repository, install Terraform, initialize it, and apply the plan.

  - hugo-deploy: It will run on another Ubuntu virtual machine and will be responsible for building the website with Hugo and deploying it to S3, but it will only execute if the terraform job completes successfully, thus avoiding any attempt to upload the website files if the infrastructure is not ready. To achieve this, it will first clone the repository including the theme submodule, configure AWS CLI credentials, install Hugo, create the website files, sync them with our S3 bucket, and invalidate CloudFront cache to have the changes available immediately.
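For debugging outside GitHub, the build and deploy steps of the hugo-deploy job could be reproduced from the repository root roughly as follows. This is a sketch under assumptions: Hugo and the AWS CLI are installed locally, the `run` helper is hypothetical and only prints the commands unless `DRY_RUN=0` is set, and `EXAMPLEID` is a placeholder for the real distribution ID.

```shell
#!/bin/sh
# Print each command instead of running it unless DRY_RUN=0 is set (hypothetical helper).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

SITE_DIR="Blog de Juanje"
BUCKET="www.juanje.eu"
# Placeholder: the real value is the ID of the distribution created by Terraform.
DISTRIBUTION_ID="${CLOUDFRONT_DISTRIBUTION_ID:-EXAMPLEID}"

deploy_site() {
  # Build the site, mirror public/ to the bucket (deleting stale objects),
  # then invalidate every cached path in CloudFront.
  run hugo --minify --source "$SITE_DIR" &&
    run aws s3 sync --delete "$SITE_DIR/public" "s3://$BUCKET" &&
    run aws cloudfront create-invalidation \
      --distribution-id "$DISTRIBUTION_ID" --paths "/*"
}

deploy_site
```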


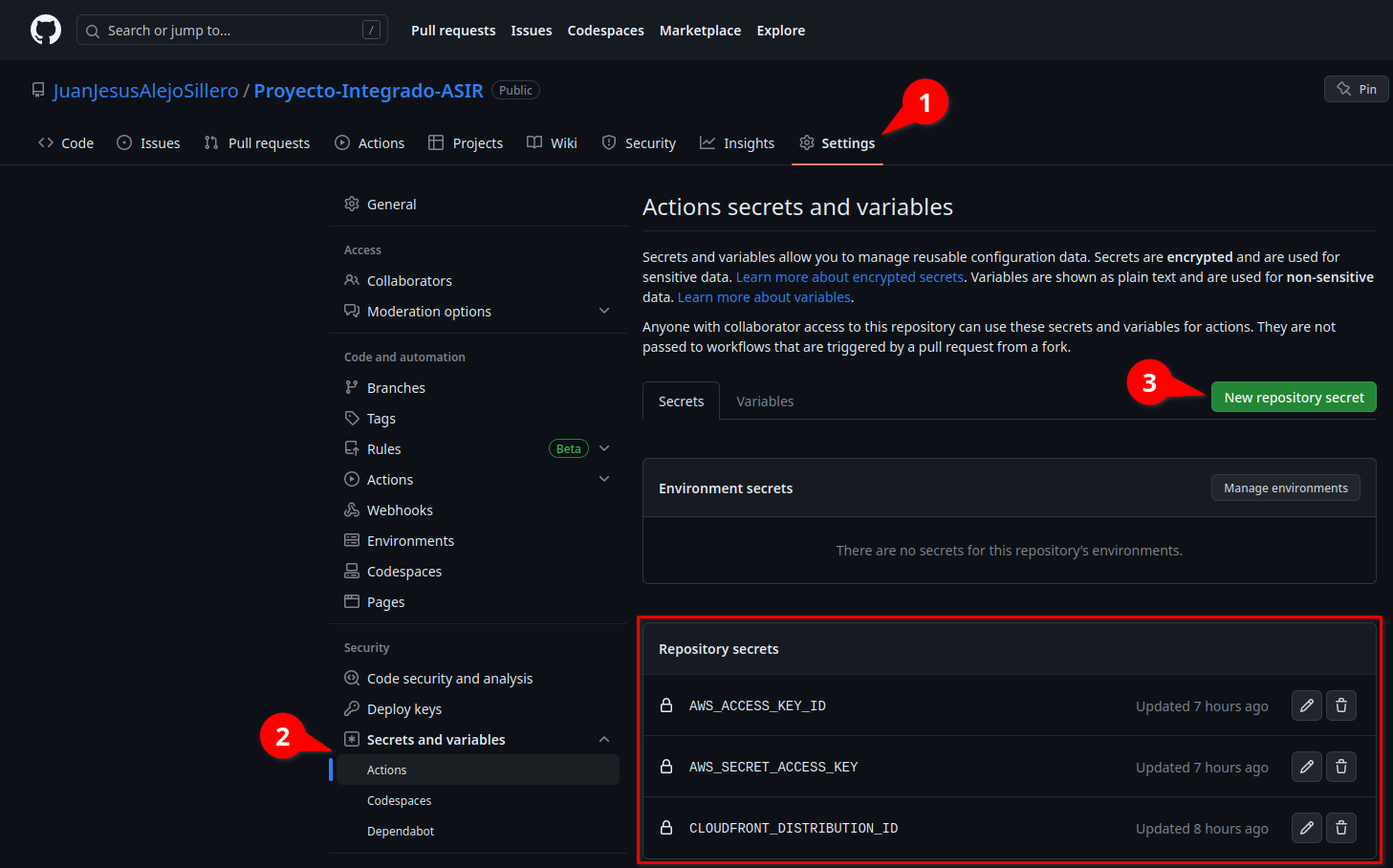

Secrets

For the workflow to run successfully, we need to create some secrets in our repository. To do this, on the GitHub website, go to Settings > Secrets and variables > Actions > New repository secret, and create the secrets that the workflow reads, each with its respective value: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and CLOUDFRONT_DISTRIBUTION_ID.
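As an alternative to the web interface, the three secrets referenced in the workflow (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and CLOUDFRONT_DISTRIBUTION_ID) could presumably be created with the GitHub CLI. The sketch assumes `gh` is installed and authenticated and that it is run inside the repository folder. The `run` helper is hypothetical and only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print each command instead of running it unless DRY_RUN=0 is set (hypothetical helper).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

set_workflow_secrets() {
  # Without a value argument, gh prompts for the secret interactively,
  # so the value never appears in the shell history.
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY CLOUDFRONT_DISTRIBUTION_ID; do
    run gh secret set "$name" || return 1
  done
}

set_workflow_secrets
```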

Testing

At this point, we can now push to one of the directories specified in the workflow (Terraform or Blog de Juanje) and verify that everything runs correctly:

As we can see, the entire CI/CD pipeline has run successfully, and we now have our static website deployed, serverless, without worrying about managing the infrastructure or the HTML/CSS/JS code.

Useful links

In addition to the bibliography listed below, it’s important to mention that all the code for Terraform, Hugo, and GitHub Actions can be found in the GitHub repository of this project, in the Terraform, Blog de Juanje and .github/workflows directories respectively.

The full project documentation is this very post you are reading.

Bibliography

In order to carry out this project, I have collected and used bits from many, many sources, as I have not been able to find one that I could follow from start to finish without encountering a myriad of issues (outdated configurations, errors in the documentation, lack of clarity in the explanations, infrastructures that are too complex or incompatible with my project, etc.). I classify them below to try and facilitate the search for information.

Official documentation

Amazon Web Services

- Choosing the price class for a CloudFront distribution - Amazon CloudFront
- Setting permissions for website access - Amazon Simple Storage Service
- Hosting a static website using Amazon S3 - Amazon Simple Storage Service
- Regiones y zonas de disponibilidad de la infraestructura global
- Regions, Availability Zones, and Local Zones - Amazon Relational Database Service
- Installing or updating the latest version of the AWS CLI - AWS Command Line Interface
- Automate static website deployment to Amazon S3 - AWS Prescriptive Guidance

Terraform

- Provider Configuration - Configuration Language | Terraform | HashiCorp Developer
- Syntax - Configuration Language | Terraform | HashiCorp Developer
- Variables - Configuration Language | Terraform | HashiCorp Developer
- The depends_on Meta-Argument - Configuration Language | Terraform | HashiCorp Developer
- Input Variables - Configuration Language | Terraform | HashiCorp Developer
- Host a Static Website with S3 and Cloudflare | Terraform | HashiCorp Developer
- Automate Terraform with GitHub Actions | Terraform | HashiCorp Developer
- aws_cloudfront_cache_policy | Data Sources | hashicorp/aws | Terraform Registry
- aws_iam_policy_document | Data Sources | hashicorp/aws | Terraform Registry
- aws_cloudfront_distribution | Resources | hashicorp/aws | Terraform Registry
- aws_cloudfront_origin_access_identity | Resources | hashicorp/aws | Terraform Registry
- aws_s3_bucket | Resources | hashicorp/aws | Terraform Registry
- aws_s3_bucket_policy | Resources | hashicorp/aws | Terraform Registry
- aws_s3_bucket_public_access_block | Resources | hashicorp/aws | Terraform Registry
- aws_s3_bucket_website_configuration | Resources | hashicorp/aws | Terraform Registry
- Automate Terraform with GitHub Actions | Terraform - HashiCorp Developer

Hugo

Git, GitHub and GitHub Actions

Blogs, forums, discussions, and text-based websites

- foo-dogsquared/hugo-theme-arch-terminal-demo: Demo of the Hugo Terminal Plus-Minus theme
- How to Set Up a Remote Backend for Terraform State File with Amazon S3
- What happened to github.event.head_commit.modified? · community · Discussion #25597
- How to Host a Website on S3 Without Getting Lost in the Sea | by Kyle Galbraith | Medium
- amazon web services - Missing Authentication Token while accessing API Gateway? - Stack Overflow
- github - Running actions in another directory - Stack Overflow
- continuous integration - Dependencies Between Workflows on Github Actions - Stack Overflow
- How Caching and Invalidations in AWS CloudFront works - Knoldus Blogs
- How to manually invalidate AWS CloudFront | by Christina Hastenrath | Medium
- Amazon S3 to apply security best practices for all new buckets - Help Net Security
- AWS S3 Sync Examples - Sync S3 buckets AWS CLI | Devops Junction
- permissions - Amazon Cloudfront with S3. Access Denied - Server Fault
- amazon web services - How to delete an aws cloudfront Origin Access Identity - Stack Overflow
- amazon web services - AWS CLI CloudFront Invalidate All Files - Stack Overflow
- amazon web services - AWS CloudFront access denied to S3 bucket - Stack Overflow
- Need help with Cloudfront, S3 https redirection error 504 : r/aws
- How to deploy an S3 Bucket in AWS- using Terraform - Knoldus Blogs
- How to Create an S3 Bucket with Terraform | Pure Storage Blog
- Host a static website locally using Simple Storage Service (S3) and Terraform with LocalStack | Docs
- Hosting a Static Website on AWS S3 using Terraform | by Shashwot Risal | Medium
- amazon web services - Terraform - AWS IAM user with Programmatic access - Stack Overflow
- Terraform Remote State Storage with AWS S3 & DynamoDB - STACKSIMPLIFY
- Hosting a Secure Static Website on AWS S3 using Terraform (Step By Step Guide) | Alex Hyett
- Deploy Hugo Sites With Terraform and Github Actions (Part 1) | Dennis Martinez
- Deploy Hugo Sites With Terraform and Github Actions (Part 2) | Dennis Martinez
- Build a static website using S3 & Route 53 with Terraform - DEV Community
- Guide to Create Github Actions Workflow for Terraform and AWS - DEV Community
- Deploy static website to S3 using Github actions - DEV Community
- github - pull using git including submodule - Stack Overflow

Video tutorials

- AWS Hands-On: Automate AWS Infra Deployment using Terraform and GitHub Actions - YouTube
- Getting Started With Terraform | Terraform Tutorial | #3 - YouTube
- AWS Tutorial - Website hosting with S3, Route 53 & Cloudfront using Namecheap domain - YouTube
- Creating an IAM User and Generating Access Key on Amazon Web Services AWS - YouTube
- Deploy Static Website to AWS S3 with HTTPS using CloudFront - YouTube
- Static website hosting on Amazon S3 (with CloudFront) without enabling public access. - YouTube
- Backends and Remote State | Terraform Tutorial | #17 - YouTube
- Terraform Tutorial for Beginners + Labs: Complete Step by Step Guide! - YouTube

✒️ Documentation written by Juan Jesús Alejo Sillero.